Is OpenAI’s Preparedness Framework better than its competitors’ “Responsible Scaling Policies”? A Comparative Analysis

Reminiscent of the release of the ill-named Responsible Scaling Policies (RSPs) developed by their rival Anthropic, OpenAI has released their Preparedness Framework (PF) which fulfills the same role. How do the two compare?

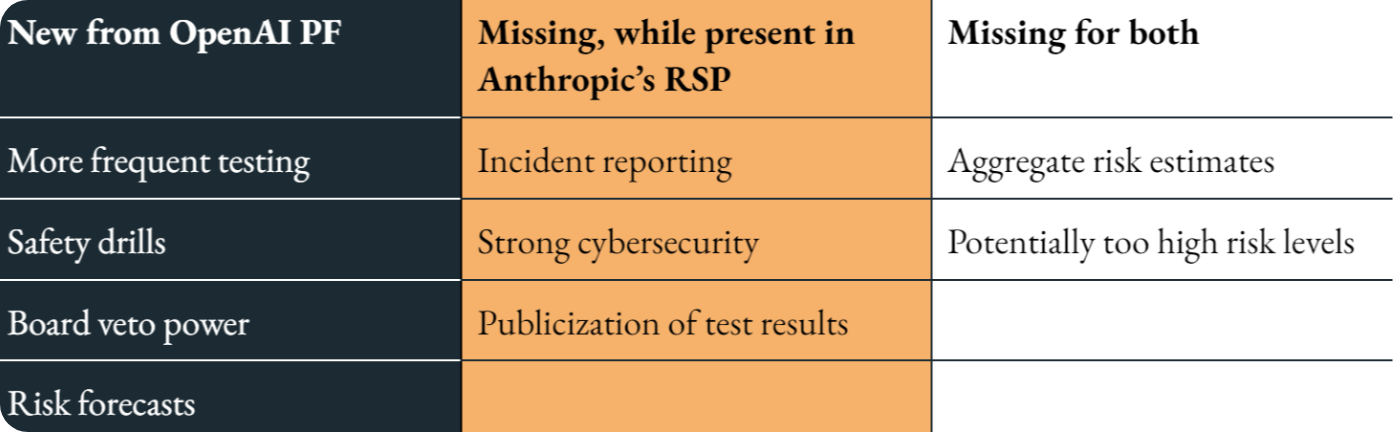

There are significant improvements of OpenAI’s Preparedness Framework (PF) over Anthropic’s RSPs:

- It runs the safety tests every 2x increase in effective computing power rather than 4x, which is a very substantial and well-needed improvement. We already have evidence of emerging capabilities like in-context learning substantially changing the risk profile & fully emerging over a 5x increase in compute (Olsson et al., 2023). This suggests that a change in the risk profile could occur with a threefold increase or less in computing power, potentially rendering Anthropic’s scaling strategy less safe compared to OpenAI’s approach.

- It adds measures relevant to safety culture and good risk management practices, such as safety drills, to stress test the company culture robustness to emergencies, a dedicated team to oversee technical work, and an operational structure for safety decision-making. Improvements at the organizational levels are very welcome to move towards more robust organizations, analogous to High Reliability Organizations (HROs), which are organizations that can deal with dangerous complex systems successfully.

- It provides the board with the ability to overturn the CEO’s decisions: the ability of the board to reverse a decision adds substantial guardrails around the safety orientation of the organization.

- It adds key components of risk assessment such as risk identification and risk analysis, including for unknown unknowns, which evaluation-based frameworks don’t catch by default.

- Forecasting risks, notably through risk scaling laws (i.e. predicting how risks will evolve as models get scaled) which is crucial for preempting the training of hazardous models rather than evaluating their dangers post-training.

However, the PF is missing some critical elements that were present in the RSPs:

- Lack of commitment to publicize the results of evaluations.

- Lack of an incident reporting mechanism, which is key in order to have a feedback loop to increase the effectiveness of safety practices.

- The commitments for infosecurity and cybersecurity are less detailed and hence likely weaker, effectively increasing short-term catastrophic risks significantly.

Both frameworks still miss some key parts of risk management:

- There is still no aggregate risk level, measured in impact and likelihood. There is no measure on the actual impact on society i.e. “the chances that our AI system causes more than $100M in economic damage is lower than 0.1%”, which could be estimated through risk management techniques that rely on expert opinions, such as the Delphi process (Koessler et al. 2023). It is highly damaging given that many believe that the risk levels that those companies propose are unbearably high.

- The risk level that those companies bring to society is likely very high. Some experts have said those companies have created a greater than 1% likelihood of catastrophic risks (chances it causes >80M deaths, i.e. 100 times more deaths than Covid). As an example, a model that can help an undergraduate create a bioweapon can be trained under both frameworks.

These frameworks prioritize different catastrophic risks

Our analysis is that OpenAI’s preparedness framework deals with accidental risks better than Anthropic’s RSPs thanks to the more frequent assessments and the greater emphasis on risk identification and unknown unknowns. On the other hand, we believe that it deals less well with short-term catastrophic misuse risks, mostly due to the lack of details in the commitment of OpenAI to significant infosecurity measures which raises questions about how effectively they can prevent the leak of models with dangerous capabilities. One of the largest risks that 2024 or 2025 models might present is one where a system released publicly could increase catastrophic risks substantially when misused by malicious actors. Cybersecurity and infosecurity is the most important component to prevent this kind of disaster. This relative positioning of OpenAI towards accidental risks, compared with Anthropic’s positioning towards misuse, reflects the respective worries of the CEOs of companies. Sam Altman, the CEO of OpenAI, is notably concerned about accidental extinction risks and has shared few public concerns about misuse risks. On the other hand, Dario Amodei, the CEO of Anthropic, has shared fewer concerns about accidental risks, but significantly more concerns about misuse risks.

OpenAI and Anthropic are both significantly ahead of their competitors in risks and safety

OpenAI and Anthropic have both voluntarily committed to frameworks that demonstrate their superiority over their competitors, not only in capabilities and risks, but most importantly in safety. The policies presented by other companies for the AI Safety Summit look pale next to those. In stark contrast with the communication of some companies without much technical grounding, Anthropic and OpenAI have written rather detailed commitments and should be applauded for that. Nevertheless, we should not accept those policies as sufficient. Scaling at the current pace endangers the stability of society and our species in a way that only policymakers can prevent by enacting more ambitious risk management policies across the ecosystem. In the spirit of moving the Preparedness Framework increasingly towards sufficient policies, we suggest some improvements.

What could be improved in the Preparedness Framework:

- Enhanced independent scrutiny through Safety Advisory Group (SAG) members external to the company. We believe that the SAG would be substantially more relevant if some of its members were external to the companies in order to further reduce the number of blind spots that the company may have.

- Measuring safety culture. Beyond the commitments OpenAI already took, measuring safety culture using processes established in other fields like nuclear safety would provide a valuable feedback loop enabling OpenAI to become over time an institution more adequate to build human-level AI safely.

- Running a Delphi process to predict the magnitude and likelihood of key risks. For most large-scale risks, economical societal, or existential, running a Delphi process, i.e. a method to aggregate risk experts’ opinions, would enable the organization to evaluate the magnitude and likelihood of risks. This would provide interpretable indicators that allow society to decide deliberately what risk level it is willing to accept. (Koessler et al., 2023)

The Preparedness Framework is helpful for the AI industry while being clearly insufficient. Policymakers need to act and remedy both the incompleteness of the existing frameworks of OpenAI and Anthropic and the absence of guardrails among most stakeholders currently developing AI systems.

A more technical version of our suggestion for improvements was sent to OpenAI.